Validation of core competencies during residency training in anaesthesiology

Heiderose Ortwein 1

Michel Knigge 2

Benno Rehberg 1

Ortrud Vargas Hein 1

Claudia Spies 1

1 Department of Anaesthesiology and Intensive Care Medicine, Charité – Universitätsmedizin Berlin, Campus Virchow Klinikum and Campus Mitte, Berlin, Germany

2 Institute for Educational Progress, Humboldt-Universität zu Berlin, Germany

Abstract

Background and goal: Curriculum development for residency training is increasingly challenging in times of financial and time constraints. Several countries have adopted the CanMEDS framework for medical education as a model for their specialty training curricula. The purpose of the present study was to validate, by means of a staff survey, the competency goals derived from CanMEDS by the Department of Anaesthesiology and Intensive Care Medicine of the Berlin Charité University Medical Centre. These goals for the qualification of specialists stipulate demonstrable competencies in seven areas: expert medical action, efficient collaboration in a team, communication with patients and family, management and organisation, lifelong learning, professional behaviour, and advocacy of good health. We had previously developed a catalogue of curriculum items based on these seven core competencies. To evaluate the validity of this catalogue, we surveyed the anaesthetists at our department about how important they considered each of these items. In addition to descriptive analysis of the data, we examined whether specialists and registrars differed in their evaluation of these objectives.

Methods: The questionnaire covered the seven adapted CanMEDS Roles, with items describing the competencies underlying each role. Every anaesthetist (registrars and specialists) working at our institution in May 2007 was invited to participate. Respondents rated the relevance of each item on a Likert-like scale ranging from 1 (highly relevant) to 5 (not at all relevant), and mean ratings were calculated. Reliability was determined with Cronbach’s alpha, and differences between subgroups were assessed by analysis of variance.

Results: All seven roles were rated as relevant. Three of the seven competency goals (expert medical action, efficient collaboration in a team, and communication with patients and family) achieved especially high ratings. Only a few items differed significantly in their average rating between specialists and registrars.

Conclusions: We succeeded in validating the relevance of the seven adapted CanMEDS competencies for residency training at our institution. Many countries have already adopted the Canadian model, which suggests that this competency-based model is practicable for curriculum planning. Roles with higher acceptance should be prioritised in existing curricula. It would be desirable to develop and validate a competency-based curriculum for specialty training in anaesthesiology throughout Germany by means of a national survey, so that both specialists and registrars are included in curriculum development.

Keywords

clinical competence, physicians, medical education, questionnaires, attitude of health personnel, curriculum

Introduction

Both the quality of residency training and its quality management are currently being discussed by several medical associations in Germany. In recent years, a number of surveys on the quality of residency training in anaesthesiology have been conducted among registrars and specialists at German hospitals [1], [2], [3], [4]. A survey of 770 registrars at German hospitals revealed that only a third of them were trained according to a structured curriculum [4]. This situation is unacceptable, especially since well-structured residency curricula are thought to facilitate more effective learning and, in turn, better clinical practice in future generations of physicians [5], [6]. In 2009, the German Medical Association (Bundesaerztekammer) initiated an evaluation of residency training in all medical subspecialties. Its low response rate of 32.8% (approx. 40% in anaesthesiology) has been criticised; to improve reliability, future evaluations will be compulsory for all hospitals that train registrars [7]. Quality was rated analogously to German school marks, i.e., from 1 (very good) to 6 (poor). Although the low response rate restricts interpretation, this evaluation revealed a relatively good overall rating of 2.54 (e.g., for the statements “I’m happy with my working environment” and “I would recommend my training program”). Nevertheless, anaesthesiology registrars rated their training worse than average on seven of the eight global factors: global rating, teaching of expert medical knowledge, learning culture, leadership, decision-making, workplace environment, and application of evidence-based medicine. Only the eighth global factor, management of medical errors, received higher-than-average ratings from anaesthesiology registrars [8], [9].

Many countries have developed structured curricula for various levels of training (e.g., for specialties and subspecialties) to ensure the high quality of future health care [10], [11], [12], [13]. Such curricula focus on outcomes and describe the competencies that registrars are expected to achieve by the end of their training. The processes leading to these outcomes may vary between individuals and institutions [14].

Various groups should be included in curriculum development, at a minimum registrars and specialists, since they are the ones who will put curriculum content into practice. Patients and representatives of other health professions may also be involved in curriculum planning. Participation in curriculum development makes the outcomes expected of future specialists more transparent [15], [16], [17]. It remains unknown whether registrars and specialists are equally capable of evaluating outcomes and learning objectives.

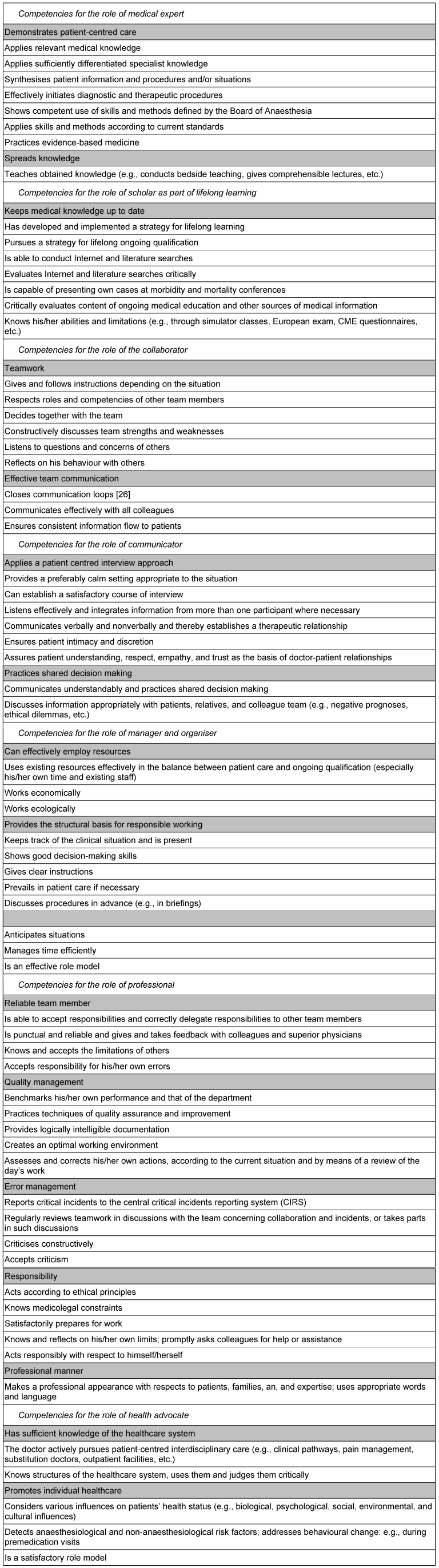

To develop a competency-based curriculum, we first defined the learning outcomes of anaesthesiology residency training at our institution. The process of curriculum development is described in detail elsewhere [18]. The group that defined the initial version of the outcomes included both registrars and specialists. Based on the CanMEDS framework (the Canadian competency model), we defined and agreed on outcomes adapted to the German system of medical training in anaesthesiology. These outcomes were developed to structure future residency training at our institution; they are listed in Table 1 [Tab. 1] [12]. The seven CanMEDS Roles of medical expert, scholar, collaborator, communicator, manager, professional, and health advocate were adapted and further specified by describing the underlying factors (i.e., outcomes). The roles and outcomes were adapted in order to structure, complete, and integrate the learning objectives defined by the local medical board.

Table 1: List of roles and competencies

In 2007, we conducted a survey among all physicians working at our institution to validate these adapted roles and outcomes. Through this validation process we intended to achieve broader acceptance of the outcomes and greater involvement of both registrars and specialists. Additionally, we investigated whether ratings differed between consultants, fellows, and registrars, and between male and female physicians.

Methods

Instruments

The survey used a structured questionnaire based on the outcomes defined at our institution, as described above, and included all outcomes (see Table 1 [Tab. 1]). Before the survey began, the questionnaire was presented to all consultants at our institution to explain its content and ensure that it was understood. The consultants’ feedback flagged some items as too complex, recommended splitting others, and pointed out unclear wording; the consultants also decided how the items should be rated. We then revised and optimised the questionnaire. Outcome items were rated analogously to German school marks on a 5-point Likert-like scale from 1 (highly relevant) to 5 (not at all relevant). The survey and questionnaire were approved by the Local Ethics Committee, and the survey was conducted over a four-week period in May 2007.

Participants

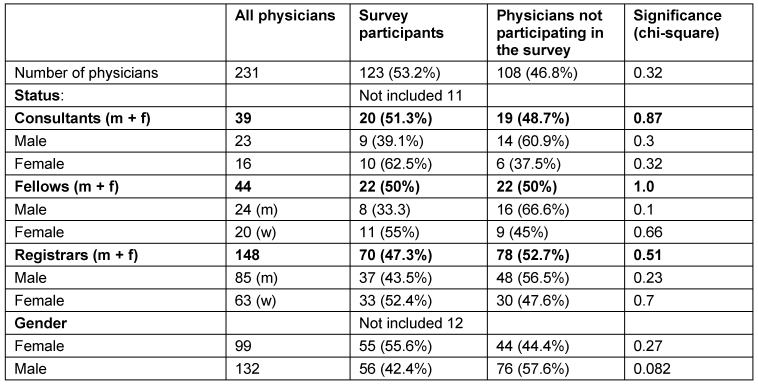

All 231 physicians of the Department of Anaesthesiology and Intensive Care Medicine at the Charité University Medical Centre in Berlin, at Campus Mitte and Campus Virchow, were asked to complete the questionnaire. Of these, 39 were consultants, 44 fellows, and 148 registrars (see Table 2 [Tab. 2]). Medical students and other temporary staff were excluded. The survey was announced verbally and by e-mail, and weekly e-mail reminders were sent while it was open.

Table 2: Comparison of all physicians and survey participants

Statistical analysis

Descriptive and exploratory statistical analysis was performed with SPSS 12.0. Mean values and standard deviations of agreement ratings on the Likert-like scale were calculated for each item. To explore the internal consistency of the questionnaire, we calculated Cronbach’s alpha for the items of each of the CanMEDS Roles. Cronbach’s alpha >0.7 was defined as acceptable and Cronbach’s alpha >0.8, as satisfactory. We conducted analysis of variance to evaluate differences between groups.
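The analysis itself was run in SPSS. As an illustration only (not the authors’ code), Cronbach’s alpha for one role with k items is alpha = k / (k - 1) * (1 - sum of item variances / variance of the respondents’ total scores). The minimal Python sketch below computes it together with the 0.7 and 0.8 thresholds defined above; the function names and the toy ratings are hypothetical. Population variances are used for both terms, so the n/(n - 1) correction cancels and the result matches the sample-variance form.

```python
from statistics import pvariance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for one role (scale) with k items.

    item_scores: list of k lists, one per item; each inner list holds the
    ratings of the same n respondents in the same order.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(item_scores[0])
    if any(len(item) != n for item in item_scores):
        raise ValueError("every item needs one rating per respondent")
    # Sum of the variances of the individual items
    item_var_sum = sum(pvariance(item) for item in item_scores)
    # Variance of each respondent's total score over all k items
    totals = [sum(item[i] for item in item_scores) for i in range(n)]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have no variance")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

def interpret(alpha):
    """Thresholds as defined in the Methods: >0.8 satisfactory, >0.7 acceptable."""
    if alpha > 0.8:
        return "satisfactory"
    if alpha > 0.7:
        return "acceptable"
    return "below acceptable"

# Hypothetical ratings: 3 items of one role, 5 respondents
ratings = [[1, 2, 2, 3, 1], [1, 2, 3, 3, 2], [2, 2, 2, 3, 1]]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3), interpret(alpha))  # 0.867 satisfactory
```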

Results

A total of 123 of 231 physicians completed the questionnaire, a response rate of 53.2%. Chi-square tests showed no significant difference between responders and all department physicians with respect to status (number of consultants, fellows, and registrars) or gender, suggesting that the sample was representative in these respects (see Table 2 [Tab. 2]: Comparison of all physicians and survey participants). Eleven responders had not specified their qualification and twelve had not indicated their gender; their questionnaires were therefore excluded from data analysis.
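The report does not state which chi-square test was used. Assuming a goodness-of-fit test of the responders’ distribution against the department’s composition (39 consultants, 44 fellows, 148 registrars), the response rate and the representativeness check can be sketched in standard-library Python as below. The responder counts are hypothetical placeholders, since Table 2 is not reproduced here, and the p-value helper relies on closed forms that exist only for 1 degree of freedom (gender) and 2 degrees of freedom (three status groups).

```python
import math

def response_rate(completed, invited):
    """Response rate in percent."""
    return 100.0 * completed / invited

def chi_square_gof(observed, population_counts):
    """Pearson chi-square statistic: responders' category counts versus the
    counts expected if responders mirrored the whole department."""
    n = sum(observed)
    total = sum(population_counts)
    expected = [n * c / total for c in population_counts]
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def chi_square_p(statistic, df):
    """Upper-tail p-value, using closed forms that exist for df = 1 and 2 only."""
    if df == 1:
        return math.erfc(math.sqrt(statistic / 2))
    if df == 2:
        return math.exp(-statistic / 2)
    raise ValueError("this sketch only handles df = 1 or df = 2")

print(round(response_rate(123, 231), 1))  # 53.2

department = [39, 44, 148]   # consultants, fellows, registrars (from the text)
responders = [20, 22, 70]    # hypothetical counts, not the study's data
stat = chi_square_gof(responders, department)
print(round(stat, 3), round(chi_square_p(stat, df=len(department) - 1), 3))
```

A non-significant result only shows that no difference was detected; it does not by itself prove that the sample is representative on characteristics that were not tested.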

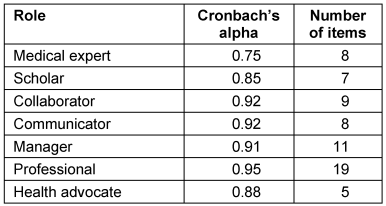

Cronbach’s alpha ranged from 0.75 to 0.95. It was acceptable for the role of medical expert and satisfactory for all other roles (see Table 3 [Tab. 3]: Calculation of Cronbach’s alpha).

Table 3: Calculation of Cronbach’s alpha

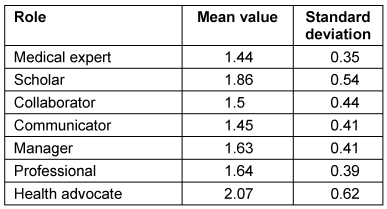

For each role, a mean value was calculated across its items. Because some questionnaires had been completed incorrectly, only 108 of the 123 questionnaires could be included in this analysis. Mean ratings ranged from 1.44 to 2.07 on the Likert-like scale. Since lower values denote higher relevance, these results indicate high overall agreement on the relevance of the described roles and items (see Table 4 [Tab. 4]).

Table 4: Mean value of roles (n=108)
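The paper does not define what made a questionnaire “incorrect”. Assuming listwise exclusion of any questionnaire with a missing or out-of-range rating, the per-role means could be computed as in this minimal sketch; the item identifiers, role mapping, and ratings are hypothetical.

```python
from statistics import mean

VALID = {1, 2, 3, 4, 5}  # 1 = highly relevant ... 5 = not at all relevant

def role_means(questionnaires, roles):
    """Return (number of questionnaires used, {role: mean rating}).

    questionnaires: list of dicts, item id -> rating (1..5) or None if missing.
    roles: dict, role name -> list of item ids belonging to that role.
    Assumption: a questionnaire is used only if every item has a valid rating
    (listwise exclusion); the paper only says 'incorrect' ones were dropped.
    """
    all_items = [item for items in roles.values() for item in items]
    complete = [q for q in questionnaires
                if all(q.get(item) in VALID for item in all_items)]
    if not complete:
        raise ValueError("no complete questionnaires")
    means = {}
    for role, items in roles.items():
        # Mean over the role's items per respondent, then over respondents
        means[role] = mean(mean(q[item] for item in items) for q in complete)
    return len(complete), means

# Hypothetical example: two roles, three questionnaires, one incomplete
roles = {"scholar": ["s1", "s2"], "manager": ["m1"]}
data = [{"s1": 1, "s2": 2, "m1": 1},
        {"s1": 2, "s2": 2, "m1": 3},
        {"s1": 1, "s2": None, "m1": 2}]
n_used, means = role_means(data, roles)
print(n_used, means)
```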

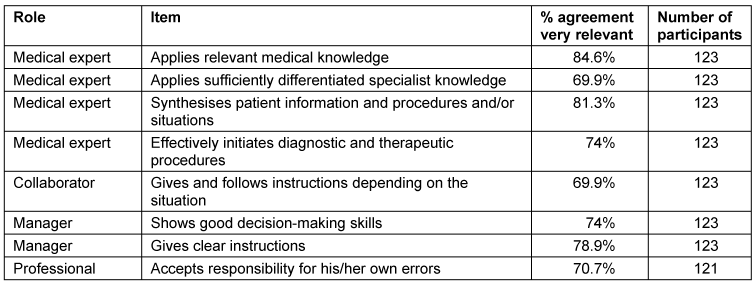

To prioritise items, we distinguished highly relevant from less relevant items. An item was considered highly relevant if ≥70% of responders rated it as very relevant. Very relevant items in the role of medical expert were “Applies relevant medical knowledge” (84.6%, n=123), “Applies sufficiently differentiated specialist knowledge” (69.9%, n=123), “Synthesises patient information and procedures and/or situations” (81.3%, n=123), and “Effectively initiates diagnostic and therapeutic procedures” (74%, n=123).

Further items rated as very relevant came from the role of collaborator: “Gives and follows instructions depending on the situation” (69.9%, n=123); from the role of manager: “Shows good decision-making skills” (74%, n=123) and “Gives clear instructions” (78.9%, n=123); and from the role of professional: “Accepts responsibility for his/her own errors” (70.7%, n=121), as shown in Table 5 [Tab. 5].

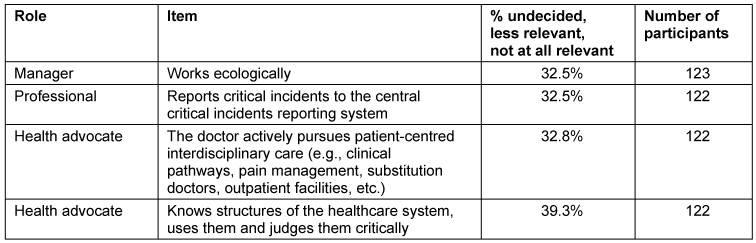

Items rated as indifferent, less relevant, or not at all relevant by more than 30% of responders were, from the role of manager, “Works ecologically” (32.5%, n=123); from the role of professional, “Reports critical incidents to the central critical incidents reporting system (CIRS)” (32.5%, n=122); and from the role of health advocate, “The doctor actively pursues patient-centred interdisciplinary care (e.g., clinical pathways, pain management, substitution doctors, outpatient facilities, etc.)” (32.8%, n=122) and “Knows structures of the healthcare system, uses them, and judges them critically” (39.3%, n=122); see Table 6 [Tab. 6].
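The two screening rules used above can be expressed as simple share thresholds. In this hedged sketch we assume that “very relevant” corresponds to a rating of 1 and that “indifferent, less relevant, or not at all relevant” corresponds to ratings of 3 to 5; counts are compared as integers so that a share of exactly 70% is not lost to floating-point rounding. The function name and example ratings are hypothetical.

```python
def classify_item(ratings, high_pct=70, low_pct=30):
    """Apply the two screening rules to one item's valid ratings (1..5).

    Returns (percent rated 1, percent rated 3-5, list of flags).
    """
    n = len(ratings)
    if n == 0:
        raise ValueError("no valid ratings")
    top = sum(1 for r in ratings if r == 1)   # assumed: 1 = very relevant
    low = sum(1 for r in ratings if r >= 3)   # assumed: 3-5 = indifferent or less
    flags = []
    if top * 100 >= high_pct * n:             # at least 70% "very relevant"
        flags.append("highly relevant")
    if low * 100 > low_pct * n:               # more than 30% "indifferent or less"
        flags.append("lower relevance")
    return 100 * top / n, 100 * low / n, flags

# Hypothetical item: 5 of 10 responders chose 1, 4 chose 3
print(classify_item([1, 1, 1, 1, 1, 2, 3, 3, 3, 3]))
```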

Comparison of different groups of survey participants

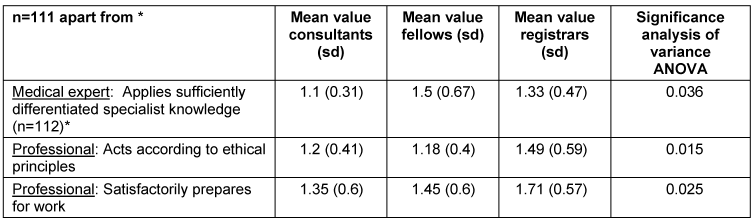

To detect differences among groups of responders, we conducted analysis of variance (ANOVA). We observed significant differences (p<0.05) among registrars, consultants, and fellows for the following items: for the role of medical expert, “Applies sufficiently differentiated specialist knowledge”; for the role of professional, “Acts according to ethical principles” and “Satisfactorily prepares for work” (see Table 7 [Tab. 7]).

Table 7: Comparison of all groups
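For readers who want to reproduce a group comparison outside SPSS, a one-way ANOVA F statistic can be computed from the between-group and within-group sums of squares, as in the following standard-library Python sketch. The group ratings are hypothetical. Turning F into a p-value requires the F distribution, which the standard library lacks; scipy.stats.f_oneway, for example, returns both the statistic and the p-value.

```python
from statistics import mean

def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each group is a sequence of numeric ratings for one item, e.g. the
    ratings given by registrars, fellows and consultants.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more ratings than groups")
    group_means = [mean(g) for g in groups]
    grand_mean = mean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of ratings around their own group mean
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical ratings of one item (1 = highly relevant ... 5 = not at all relevant)
registrars = [2, 3, 2, 3, 2]
fellows = [1, 2, 1, 2]
consultants = [1, 1, 2, 1]
print(one_way_anova(registrars, fellows, consultants))
```

With only two groups, as in the pairwise comparisons that follow, the F statistic equals the square of the pooled-variance two-sample t statistic.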

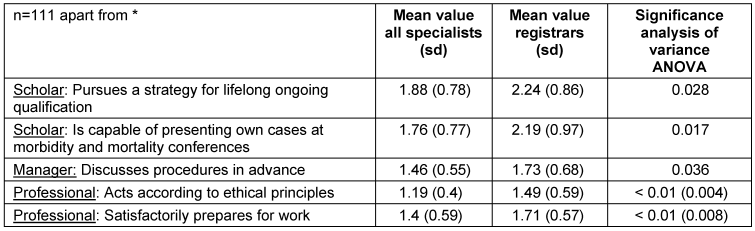

Comparison of fellows and registrars: fellows rated the following items as significantly more relevant than registrars did (p<0.01): from the role of professional, “Acts according to ethical principles” and “Satisfactorily prepares for work”. Additionally, all specialists (with and without supervising duty) rated the following items as significantly more relevant than registrars did (p<0.05): from the role of scholar, “Pursues a strategy for lifelong ongoing learning” and “Is capable of presenting own cases at morbidity and mortality conferences”; from the role of manager, “Discusses procedures in advance” (see Table 8 [Tab. 8]).

Table 8: Comparison of all specialists (consultants and fellows) vs. registrars

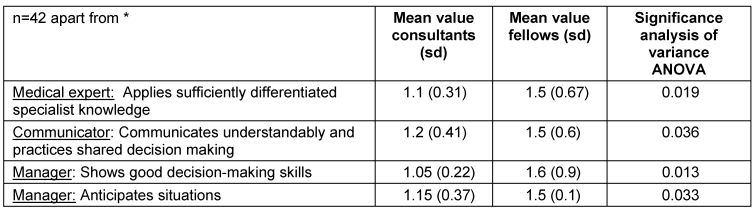

Consultants rated the following items as significantly more relevant than fellows did (p<0.05): for the role of medical expert, “Applies sufficiently differentiated specialist knowledge”; for the role of communicator, “Communicates understandably and practices shared decision making”; for the role of manager, “Shows good decision-making skills” and “Anticipates situations” (see Table 9 [Tab. 9]).

Table 9: Comparison of consultants vs. fellows

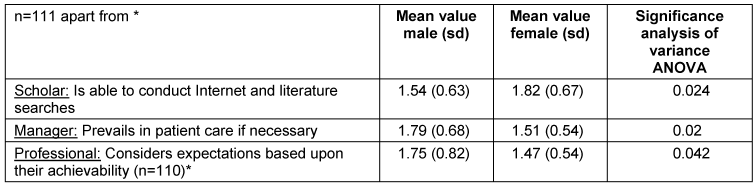

Male responders rated the following item as significantly more relevant than their female colleagues did: from the role of scholar, “Is able to conduct Internet and literature searches”. Female responders rated the following items as significantly more relevant than their male colleagues did (p<0.05): from the role of manager, “Prevails in patient care if necessary”; from the role of professional, “Considers expectations based upon their achievability” (see Table 10 [Tab. 10]).

Table 10: Comparison between male vs. female participants

Discussion

To develop a competency-based curriculum for residency training, we adapted the Canadian CanMEDS Roles to our needs and validated the adapted items by means of a survey [12]. To date, many countries have adopted the CanMEDS framework for curriculum development in residency training, e.g., Denmark, the Netherlands, Switzerland, Australia, and New Zealand. The framework was developed in Canada, and its rapid international acceptance suggests that it can also be applied effectively in European countries. Ringsted and coworkers conducted a survey among Danish physicians that showed good acceptance of the CanMEDS framework in their country [19], [20], [21], [22]. Our adaptation of the CanMEDS framework for residency training in anaesthesiology was rated as relevant by the responding anaesthetists at our institution, which indicates broad acceptance of this model at an anaesthesiology department in Germany. In the Danish survey, communicating with patients and relatives was rated as most relevant; our data likewise showed high relevance for this role. The Danish survey rated the role of collaborator as second least relevant, whereas in our survey it was among the three most highly rated roles. This difference might reflect the specialties surveyed: the Danish survey covered all medical specialties, whereas ours was confined to anaesthesiology, where interdisciplinary and inter-professional collaboration, particularly with nursing staff, is extremely important. In both surveys, the role of health advocate was considered least relevant [19]. A Canadian survey documented uncertainty about teaching and assessing the role of health advocate [23], which could partially explain the low ratings observed in our survey.
Additionally, both the Danish survey and ours involved only physicians, which may be another reason for the low rating of this particular role.

Defining roles and their competency outcomes is a means of organising specialist training so that it becomes more effective and transparent. A key challenge in this process is the scarcity of time and staff, which makes it important to use resources as effectively as possible. In our survey, the roles of medical expert, collaborator, and communicator were considered the three most important. Given the prevailing limitations of resources, these roles, together with items of similarly high relevance, should receive the highest priority in the curriculum. Interpretation of our data is limited because we conducted our study at one institution with a selected group (anaesthetists). A response rate of 53% is acceptable compared with other surveys in the field [1], [2], [3], [4], [7], [19]. In addition, our data were collected in 2007, and a new generation of doctors and patients may yield different results. It is therefore necessary to conduct an updated German survey on these roles and their competencies with respect to the future specialist in anaesthesiology. A survey among members of the German Association of Anaesthetists and Intensive-Care Specialists (Deutsche Gesellschaft fuer Anaesthesiologie und Intensivmedizin, DGAI) could generate more broadly valid data.

In our survey, we observed only a few significant differences between groups of responders, e.g., between registrars and specialists. The Danish survey found similar results, although differences in the relevance of roles were observed between specialties [19]. This suggests that the relevance of curriculum content can be established by registrars and specialists alike. Both groups should therefore be involved more actively in curriculum planning in Germany, as is already the case in many other countries [12], [21]. Future evaluations of the relevance of the various roles or competency-based outcomes should also involve other health-care professionals as well as patients [24].

We calculated Cronbach’s alpha to assess the internal consistency of the roles. The value for the medical expert scale was relatively low, though still acceptable; all other scales showed good results. Ringsted and coworkers found similar values for internal consistency [19]. This may be because the role of medical expert combines knowledge items (basic and specialty) with skills items. Separating these items might improve internal consistency but would make international comparison of roles more difficult.

Adopting a competency-based model is intended to enhance the structure and transparency of our residency curriculum. The survey showed broad acceptance of all roles and underlying items. The efficiency of the various learning activities (e.g., lectures, practical training, on-the-job feedback, literature searches) must still be evaluated. Learning in practice should receive priority, since it typically offers more satisfactory learning than lecture-based components do [25].

Notes

Competing interests

The authors declare that they have no competing interests.

References

[1] Goldmann K, Steinfeldt T, Wulf H. Die Weiterbildung für Anästhesiologie an deutschen Universitätskliniken aus Sicht der Ausbilder - Ergebnisse einer bundesweiten Umfrage. Anästhesiol Intensivmed Notfallmed Schmerzther. 2006;41(4):204-12. DOI: 10.1055/s-2006-925367

[2] Lehmann KA, Schultz JH. Zur Lage der anästhesiologischen Weiter- und Fortbildung in Deutschland, Ergebnisse einer Repräsentativumfrage. Anästhesist. 2001;50(4):248-61. DOI: 10.1007/s001010170028

[3] Prien T, Siebolds M. Beurteilung der Facharztweiterbildung durch Ärzte in Weiterbildung anhand eines validierten Fragebogens. Anästh Intensivmed. 2004;45(1):25-31.

[4] Radtke RM, Hahnenkamp K. Weiterbildung im klinischen Alltag: Bestandsaufnahme und Strategien. Anästh Intensivmed. 2007;48(5):240-50.

[5] Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007;29(7):642-7. DOI: 10.1080/01421590701746983

[6] Swing SR. The ACGME outcome project: retrospective and prospective. Med Teach. 2007;29(7):648-54. DOI: 10.1080/01421590701392903

[7] Hoeft K, Güntert A. Die Weiterbildungskultur muss sich entwickeln. Arzt und Krankenhaus. 2010;10:292-9.

[8] Eidgenössische Technische Hochschule Zürich (ETH Zürich); Institute for Environmental Decisions (IED), Consumer Behavior; Bundesärztekammer. Ergebnisse der Evaluation der Weiterbildung - 1. Befragungsrunde 2009, Bundesrapport. In: Bundesärztekammer, ed. Evaluation der Weiterbildung in Deutschland. Online-Befragung 2009. 2010. Available from: http://www.bundesaerztekammer.de/downloads/eva_bundesrapport_final_16042010.pdf

[9] Hibbeler B, Korzilius H. Evaluation der Weiterbildung: ein erster Schritt. Dtsch Ärztebl. 2010;107(10):A417-20. Available from: http://www.aerzteblatt.de/v4/archiv/pdf.asp?id=68064

[10] Paterson Davenport LA, Hesketh EA, Macpherson SG, Harden RM. Exit learning outcomes for the PRHO year: an evidence base for informed decisions. Med Educ. 2004;38(1):67-80. DOI: 10.1111/j.1365-2923.2004.01736.x

[11] Ringsted C, Henriksen AH, Skaarup AM, Van der Vleuten CP. Educational impact of in-training assessment (ITA) in postgraduate medical education: a qualitative study of an ITA programme in actual practice. Med Educ. 2004;38(7):767-77. DOI: 10.1111/j.1365-2929.2004.01841.x

[12] The Royal College of Physicians and Surgeons of Canada. CanMEDS 2005 Framework. 1st ed. Ottawa: The Royal College of Physicians and Surgeons of Canada; 2005.

[13] Brown AK, Roberts TE, O'Connor PJ, Wakefield RJ, Karim Z, Emery P. The development of an evidence-based educational framework to facilitate the training of competent rheumatologist ultrasonographers. Rheumatology (Oxford). 2007;46(3):391-7. DOI: 10.1093/rheumatology/kel415

[14] Leung WC. Competency based medical training: review. BMJ. 2002;325(7366):693-6. DOI: 10.1136/bmj.325.7366.693

[15] Harden RM, Crosby JR, Davis MH, Friedman M. AMEE Guide No. 14: Outcome-based education: Part 5 - From competency to meta-competency: a model for the specification of learning outcomes. Med Teach. 1999;21(6):546-52. DOI: 10.1080/01421599978951

[16] Frank JR, ed. The CanMEDS 2005 Physician Competency Framework. Better standards. Better physicians. Better care. Ottawa: The Royal College of Physicians and Surgeons of Canada; 2005. Available from: http://meds.queensu.ca/medicine/obgyn/pdf/CanMEDS2005.booklet.pdf

[17] Calder J. Survey research methods. Med Educ. 1998;32(6):638-52. DOI: 10.1046/j.1365-2923.1998.00227.x

[18] Ortwein H, Dirkmorfeld L, Haase U, Herold KF, Marz S, Rehberg B, et al. Zielorientierte Ausbildung als Steuerungsinstrument für die Facharztweiterbildung in der Anästhesiologie. Anästh Intensivmed. 2007;48(7):420-9.

[19] Ringsted C, Hansen TL, Davis D, Scherpbier A. Are some of the challenging aspects of the CanMEDS roles valid outside Canada? Med Educ. 2006;40(8):807-15. DOI: 10.1111/j.1365-2929.2006.02525.x

[20] Lillevang G, Bugge L, Beck H, Joost-Rethans J, Ringsted C. Evaluation of a national process of reforming curricula in postgraduate medical education. Med Teach. 2009;31(6):e260-6. DOI: 10.1080/01421590802637966

[21] Scheele F, Teunissen P, Van Luijk S, Heineman E, Fluit L, Mulder H, Meininger A, Wijnen-Meijer M, Glas G, Sluiter H, Hummel T. Introducing competency-based postgraduate medical education in the Netherlands. Med Teach. 2008;30(3):248-53. DOI: 10.1080/01421590801993022

[22] Pasch T, Zalunardo MP, Orlow P, Siegrist M, Giger M. Weiterbildung zum Facharzt für Anästhesiologie in der Schweiz. Anästh Intensivmed. 2008;49(5):270-80.

[23] Verma S, Flynn L, Seguin R. Faculty's and residents' perceptions of teaching and evaluating the role of health advocate: a study at one Canadian university. Acad Med. 2005;80(1):103-8. DOI: 10.1097/00001888-200501000-00024

[24] The Royal College of Physicians and Surgeons of Canada. Skills for the new millennium: report of the societal needs working group: CanMEDS 2000 Project. In: The Royal College of Physicians and Surgeons of Canada, eds. Canadian Medical Education Directions for Specialists 2000 Project. Ottawa; 1996.

[25] Klemperer D. Erfahrungen mit Methoden der systematischen Kompetenzdarlegung und Rezertifizierung in der Medizin in Kanada, Chancen für Deutschland. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz. 2006;49(5):418-25. DOI: 10.1007/s00103-006-1250-7

[26] Brown JP. Closing the communication loop: using readback/hearback to support patient safety. Jt Comm J Qual Saf. 2004;30(8):460-4.